The rise of AI tools forces schools to reconsider what counts as cheating

Many of the teaching and assessment tools that have been used for generations are no longer effective

(AP) - The book report is now a thing of the past. Take-home tests and essays are becoming obsolete.

Student use of artificial intelligence has become so prevalent, high school and college educators say, that to assign writing outside of the classroom is like asking students to cheat.

“The cheating is off the charts. It’s the worst I’ve seen in my entire career,” says Casey Cuny, who has taught English for 23 years. Educators are no longer wondering if students will outsource schoolwork to AI chatbots. “Anything you send home, you have to assume is being AI’ed.”

The question now is how schools can adapt, because many of the teaching and assessment tools that have been used for generations are no longer effective. As AI technology rapidly improves and becomes more entwined with daily life, it is transforming how students learn and study and how teachers teach, and it’s creating new confusion over what constitutes academic dishonesty.

“We have to ask ourselves, what is cheating?” says Cuny, a 2024 recipient of California’s Teacher of the Year award. “Because I think the lines are getting blurred.”

Cuny’s students at Valencia High School in southern California now do most writing in class. He monitors student laptop screens from his desktop, using software that lets him “lock down” their screens or block access to certain sites. He’s also integrating AI into his lessons and teaching students how to use AI as a study aid “to get kids learning with AI instead of cheating with AI.”

In rural Oregon, high school teacher Kelly Gibson has made a similar shift to in-class writing. She is also incorporating more verbal assessments to have students talk through their understanding of assigned reading.

“I used to give a writing prompt and say, ‘In two weeks, I want a five-paragraph essay,’” says Gibson. “These days, I can’t do that. That’s almost begging teenagers to cheat.”

Take, for example, a once typical high school English assignment: Write an essay that explains the relevance of social class in “The Great Gatsby.” Many students say their first instinct is now to ask ChatGPT for help “brainstorming.” Within seconds, ChatGPT yields a list of essay ideas, plus examples and quotes to back them up. The chatbot ends by asking if it can do more: “Would you like help writing any part of the essay? I can help you draft an introduction or outline a paragraph!”

Students are uncertain when AI usage is out of bounds

Students say they often turn to AI with good intentions for things like research, editing or help reading difficult texts. But AI offers unprecedented temptation, and it’s sometimes hard to know where to draw the line.

College sophomore Lily Brown, a psychology major at an East Coast liberal arts school, relies on ChatGPT to help outline essays because she struggles putting the pieces together herself. ChatGPT also helped her through a freshman philosophy class, where assigned reading “felt like a different language” until she read AI summaries of the texts.

“Sometimes I feel bad using ChatGPT to summarize reading, because I wonder, is this cheating? Is helping me form outlines cheating? If I write an essay in my own words and ask how to improve it, or when it starts to edit my essay, is that cheating?”

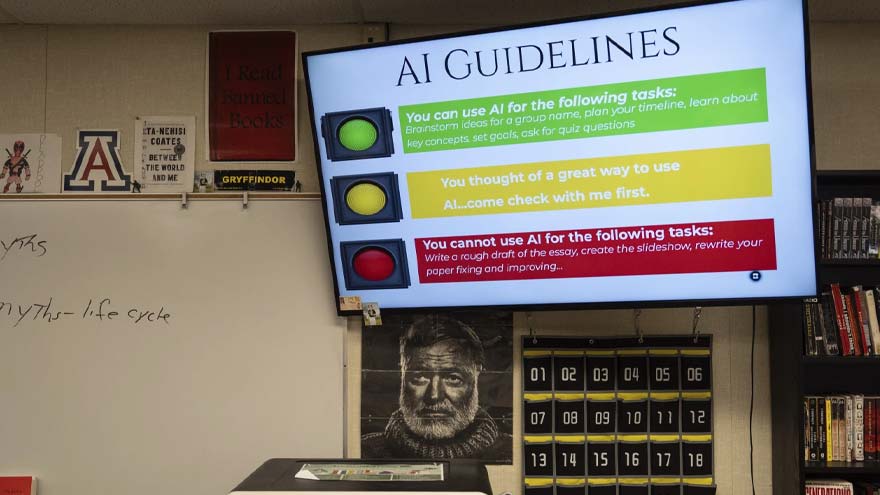

Schools tend to leave AI policies to teachers, which often means that rules vary widely within the same school. Some educators, for example, welcome the use of Grammarly.com, an AI-powered writing assistant, to check grammar. Others forbid it, noting the tool also offers to rewrite sentences.

Schools are introducing guidelines, gradually

Many schools initially banned use of AI after ChatGPT launched in late 2022. But views on the role of artificial intelligence in education have shifted dramatically. The term “AI literacy” has become a buzzword of the back-to-school season, with a focus on how to balance the strengths of AI with its risks and challenges.

Over the summer, several colleges and universities convened their AI task forces to draft more detailed guidelines or provide faculty with new instructions.

The University of California, Berkeley emailed all faculty new AI guidance that instructs them to “include a clear statement on their syllabus about course expectations” around AI use. The guidance offered language for three sample syllabus statements — for courses that require AI, ban AI in and out of class, or allow some AI use.

Enforcing academic integrity policies has become more complicated, since use of AI is hard to spot and even harder to prove, Fitzsimmons said. Faculty are allowed flexibility when they believe a student has unintentionally crossed a line, but are now more hesitant to point out violations because they don’t want to accuse students unfairly. Students worry that if they are falsely accused, there is no way to prove their innocence.