Examiners struggle to tell difference between answers written by AI and those from real human students

The AI achieved higher average grades than real students

(Web Desk) – A new study suggests AI chatbots are making cheating more efficient than ever.

Even experienced examiners now struggle to spot the difference between AI and real human students, researchers have found.

The experts from the University of Reading secretly added responses entirely generated by ChatGPT to a real undergraduate psychology exam.

And, despite the researchers using AI in the simplest and most obvious manner, unsuspecting markers failed to spot the AI responses in 94 per cent of cases.

Even more worryingly, the AI actually outperformed human students on average - achieving high 2:1 and 1st-level grades.

The rapid advancement of text-generating AIs such as ChatGPT has created a serious risk that AI-powered cheating could undermine the examination process.

To see just how bad this problem could be, Associate Professor Peter Scarfe and Professor Etienne Roesch attempted to 'infiltrate' a real examination with AI.

The researchers created 33 fake student profiles which they registered to sit at-home online exams in various undergraduate psychology modules.

Using ChatGPT-4, the researchers created completely artificial responses to both short-answer questions of 200 words and entire 1,500-word essays.

These answers were then submitted alongside responses from real students on the School of Psychology and Clinical Language Sciences exam system.

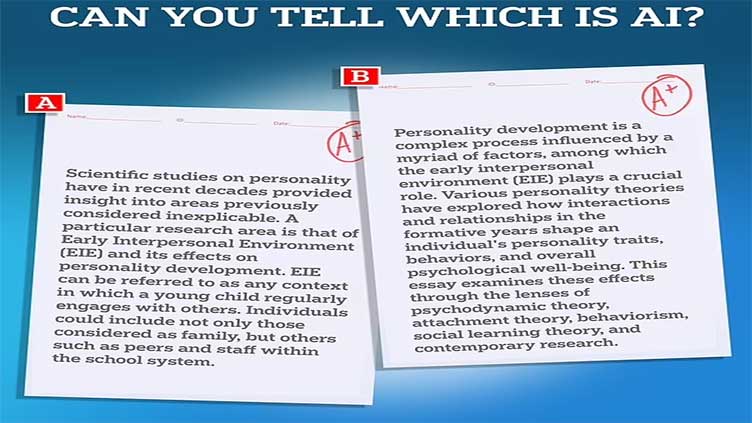

To show how difficult it can be to tell the difference, MailOnline has generated our own example essays.

Due to data privacy, the researchers weren't able to share any of the exam answers with us, but we have used their exact AI prompt to answer an example undergraduate psychology question using ChatGPT.

One of the pictured samples is generated by AI while the other is a human example taken from a University of South Australia essay writing guide.

None of the markers were aware that any experiment was taking place and there was nothing to indicate that the AI papers were any different.

Out of the 63 AI-generated papers submitted, only 6 per cent were flagged by examiners as potentially suspicious, while the remaining 94 per cent went completely unnoticed.

The AI achieved higher average grades than real students, in some modules outperforming its human classmates by a full grade boundary.

In 83 per cent of cases, the AI got grades that were better than those of a randomly selected set of students.