Robot taught to feed people who can't feed themselves

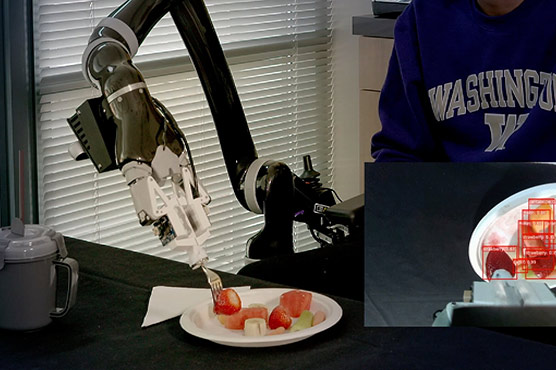

The robotic arm has a pincer hand that picks up a fork equipped with a pressure sensor to prevent injury.

SEATTLE (Reuters) - "Great, here we go." With these words, a robot lets a researcher at the University of Washington know that he is about to be fed.

The mechanical voice says: "Ready, make sure I can see your face," and a large piece of cantaloupe melon is placed directly in front of the recipient's mouth.

As soon as the fruit has been taken, the robotic arm goes through the whole process again.

Researcher Tapomayukh Bhattacharjee from the university's Robotic Lab in Seattle explains how it is all done.

"In terms of just the technology itself, we are using multi-modal sensing capabilities such as vision and haptic technology, which is the sense of touch, to be able to skewer a food item and feed a person," he told Reuters.

According to 2010 data, one million adults in the United States need someone to help them eat, so the need is clear. But as project lead Professor Siddartha Srinivasa explains, the hardware is not what makes this project unique.

"A very big focus of our work is to be able to take existing hardware and be able to use artificial intelligence and machine-learning to be able to make it perform superhuman things," he said.

"I think it was very important for us that we could take the same robot arm, the same wheelchair that these users were very comfortable using and were using in their homes, and retrofit it with our technology - they were working with a familiar piece of hardware that was suddenly so much more capable because of the intelligence that was added into it," he added.

The robotic arm has a pincer hand that can pick up a fork equipped with a pressure sensor to prevent injury.

That is the point where the off-the-shelf technology ends and the research begins.

A camera mounted on the robotic arm uses computer vision and machine learning to recognise the pieces of fruit, and the system also follows verbal commands from the user.

The camera learns to recognise the user's eyes, nose and, most importantly, mouth. The system can adapt the arm's movements even when the person moves.

According to Bhattacharjee, the process is more challenging than it might appear.

"Eating free-form food is actually a very intricate manipulation task because this is a deformable object, they are hard to model and like it's in a cluttered plate," he said.

"We are trying to find what is the best way to pick up a food item, so that it is not only successful to pick up a food item but later on it is easier for the person to take a bite," he added.

The system is still in its early stages: during a demonstration, the robot utters "Oops" when the fork misses a strawberry.

The research team expects that perfecting the system will take years, so it is taking an inclusive approach.

"To that end we are releasing open source code, open source hardware, open source benchmarks, so that we can create a community, an ecosystem of researchers who are excited about working on this problem of feeding for assistive care," Srinivasa said.

In the meantime, while there is no danger that the research team will go hungry, they do hope to teach the robotic arm to pick up smaller pieces of food soon.